What is Unix?

Unix, trademarked as UNIX, is a multi-user, multitasking operating system (OS) designed for flexibility and adaptability. Originally developed in the 1970s, Unix was one of the first operating systems written in the C programming language. Since its introduction, the Unix operating system and its derivatives have had a profound effect on the computer and electronics industry, offering portability, stability and interoperability across a range of heterogeneous environments and device types.

Unix history

In the 1960s, Bell Labs (then part of AT&T), General Electric, and the Massachusetts Institute of Technology attempted to develop an interactive time-sharing system called Multiplexed Information and Computing Service (Multics) that would allow multiple users to access a mainframe at the same time.

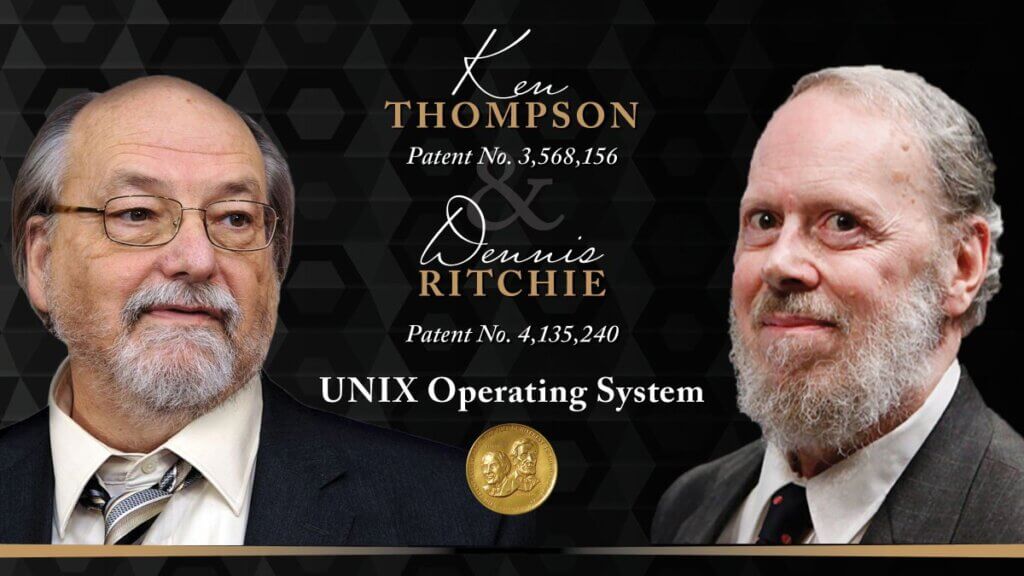

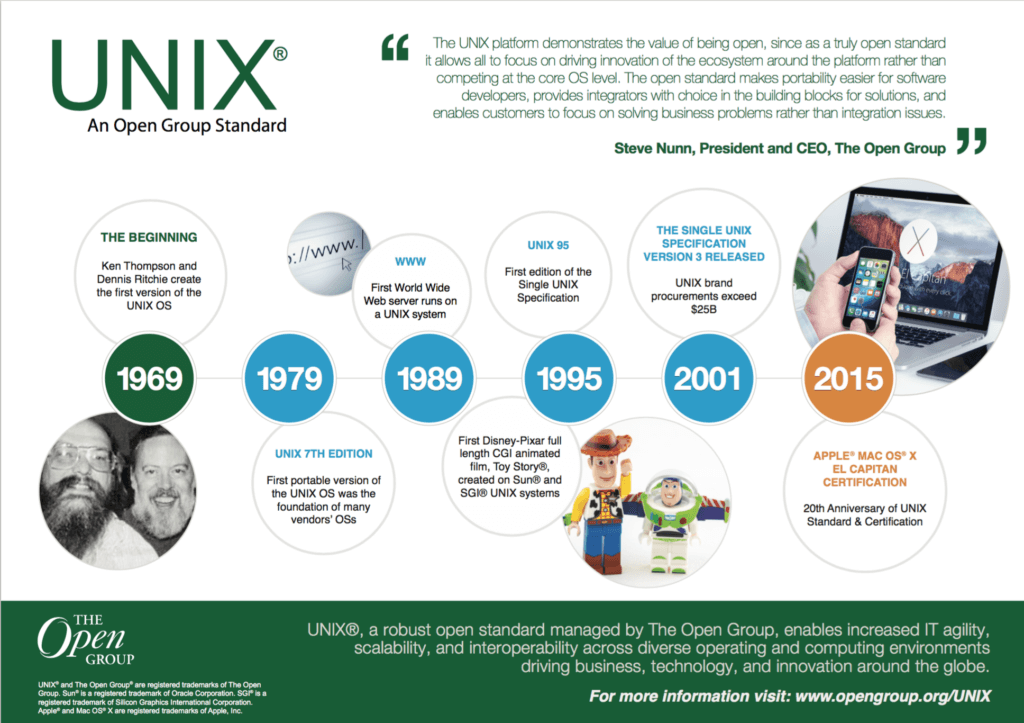

Disappointed with the results, Bell Labs withdrew from the project, but Bell computer scientists Ken Thompson and Dennis Ritchie continued their work, which culminated in the development of the Unix operating system. As part of this effort, Thompson and Ritchie recruited other Bell Labs researchers and together built a suite of components that provided a foundation for the operating system. The components included a hierarchical file system, a command line interface (CLI), and smaller utility programs. The operating system also brought with it the concepts of computer processes and device files.

A month later, Thompson implemented a self-hosting operating system with an assembler, editor, and shell. The name, pronounced YEW-nihks, was a pun on the previous system: an emasculated or eunuch version of Multics. Unix was much smaller than what the original developers had intended for Multics and was a single-tasking system. Multitasking capabilities would come later.

Before 1973, Unix was written in assembly language, but the fourth edition was rewritten in C. This was revolutionary at the time because operating systems were thought to be too complex and sophisticated to be written in a high-level language such as C. The rewrite increased the portability of Unix across multiple computing platforms.

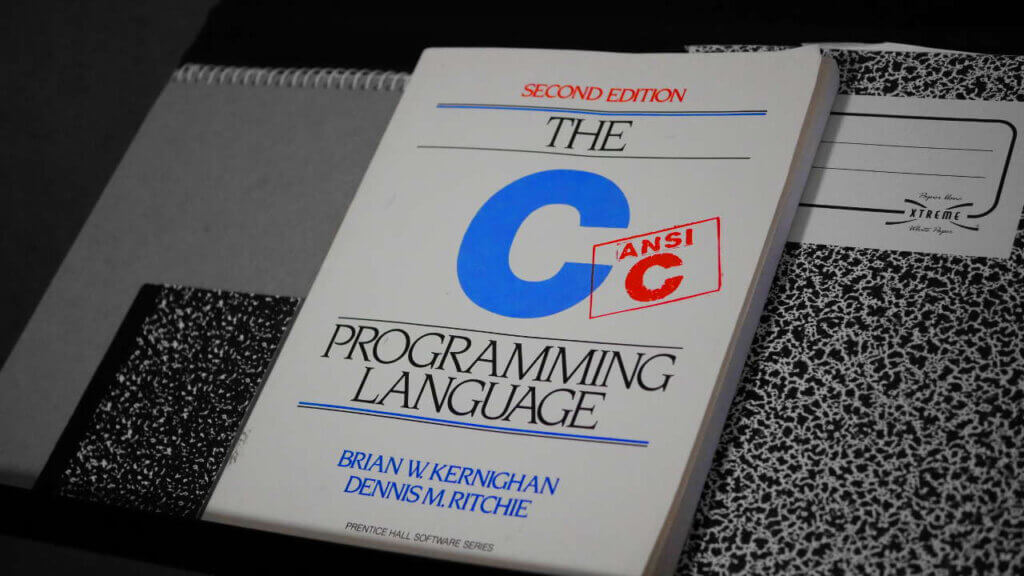

To appreciate the genius of these computer science pioneers, it is worth noting that the C language itself, a foundation of programming and of computer science, was created by Dennis Ritchie, the same Dennis Ritchie who co-created UNIX. The famous textbook "The C Programming Language", which he wrote with Brian Kernighan, is used in computer science courses at technology-focused universities and is widely known as "Kernighan & Ritchie" or more simply "K&R".

In the late 70s and early 80s, Unix built up a strong following in academia, which led to commercial startups, such as Solaris Technologies and Sequent, adopting it on a larger scale. Between 1977 and 1995, the Computer Systems Research Group of the University of California, Berkeley, developed Berkeley Software Distribution (BSD), one of the earliest Unix distributions and the basis for many other Unix spin-offs.

More specifically, here are some key milestones in the development of the various Unix versions:

Unix V6, released in 1975, became very popular. Unix V6 was free and was distributed with its source code.

In 1983, AT&T released Unix System V, which was a commercial version.

Meanwhile, the University of California at Berkeley had started developing its own version of Unix. Berkeley was also involved in adding the Transmission Control Protocol/Internet Protocol (TCP/IP) networking protocols to Unix.

The following were major milestones in UNIX history in the 1980s:

• AT&T was developing its System V Unix.

• Berkeley took the lead on its own BSD (Berkeley Software Distribution) Unix.

• Sun Microsystems developed its own BSD-based Unix called SunOS, which was later renamed Solaris.

• Microsoft and the Santa Cruz Operation (SCO) were involved in another version of UNIX called XENIX.

• Hewlett-Packard developed HP-UX for its workstations.

• DEC released ULTRIX.

• In 1986, IBM developed AIX (Advanced Interactive eXecutive).

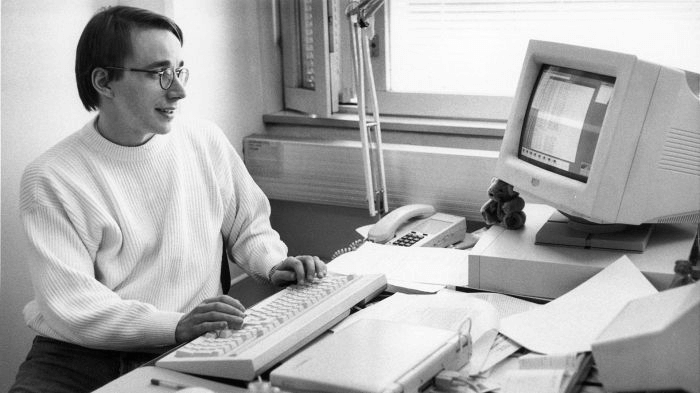

In 1991, Linus Torvalds, a student at the University of Helsinki, inspired by MINIX, created a Unix-like operating system for his PC. He would later name his project Linux and make it available as a free download, which led to the growing popularity of Unix-like systems.

Today, a wide variety of modern servers, workstations, mobile devices, and embedded systems run Unix-based or Unix-like operating systems, including Mac computers running macOS and Android mobile devices, which use the Linux kernel.

What is Unix for?

Unix is a modular operating system consisting of a number of essential components, including the kernel, shell, file system, and a core set of utilities or programs.

The heart of the Unix operating system is the kernel, a main control program that provides services for starting and ending programs. It also handles low-level operations, such as memory allocation, file management, answering system calls, and scheduling tasks. Task scheduling is necessary to avoid conflicts when multiple programs try to access the same resource at the same time.
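As a rough illustration of the kernel services described above, the following Python sketch starts a program and waits for it to end by using the fork, exec, and wait system calls through Python's os module. The helper name run_program is hypothetical, and the sketch assumes a Unix-like system; it is meant to show the idea, not how any particular shell is implemented.

```python
import os
import sys


def run_program(argv):
    """Start argv as a new process and return its exit status.

    Hypothetical helper for illustration; assumes a Unix-like system.
    """
    pid = os.fork()  # ask the kernel to create a child process
    if pid == 0:
        # Child: ask the kernel to replace this process with the new program.
        try:
            os.execvp(argv[0], argv)
        except OSError:
            os._exit(127)  # the program could not be started
    # Parent: block until the kernel reports that the child has ended.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)
    return -os.WTERMSIG(status)  # the child was ended by a signal


if __name__ == "__main__":
    # Run a tiny child program that exits with a chosen status.
    code = run_program([sys.executable, "-c", "raise SystemExit(3)"])
    print("child exit status:", code)
```

Each step (creating the process, loading the program, collecting the exit status) is a separate request to the kernel, which is why the kernel is described as the main control program.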

At the core of UNIX are technical concepts and implementation choices that make it easy and elegant to use the output of one program as the input of another, and so on down the chain.

With this approach, chaining together many single-purpose commands makes it possible to produce the results needed for even a very complex task.

For example, you may need (this was a real internal case) to download all the separate PDFs of annuity movements from a banking system, convert them to TXT, extract fields such as Date, Company name, Reason, and Amount, and compile them into a file in XLS format.

A combination of wget, pdf2txt, and AWK handles this need elegantly and solves the problem.

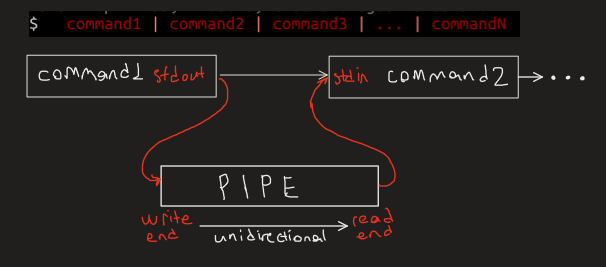

Unix supports the pipe (|), a powerful tool for linking multiple commands to create complex workflows. When two or more commands are linked together, the output of the first command is used as the input for the second command, the output of the second command is used as the input for the third command, and so on.
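To make the chaining concrete, here is a minimal Python sketch that wires two standard utilities together the way the shell does for sort | uniq. The function name sort_unique is hypothetical, and the sketch assumes the sort and uniq utilities are installed, as they are on typical Unix-like systems. It is intended for small inputs only.

```python
import subprocess


def sort_unique(text):
    """Feed text through the equivalent of `sort | uniq` and return the result.

    Hypothetical helper for illustration; assumes sort and uniq are on PATH.
    """
    # First command: sort reads from a pipe we write to and writes to a second pipe.
    sort = subprocess.Popen(
        ["sort"], stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
    )
    # Second command: uniq takes sort's output pipe as its standard input.
    uniq = subprocess.Popen(
        ["uniq"], stdin=sort.stdout, stdout=subprocess.PIPE, text=True
    )
    sort.stdout.close()  # the parent no longer needs its copy of that pipe
    sort.stdin.write(text)
    sort.stdin.close()  # signal end of input to sort
    output, _ = uniq.communicate()  # collect whatever uniq writes
    sort.wait()
    return output


if __name__ == "__main__":
    print(sort_unique("banana\napple\nbanana\ncherry\napple\n"), end="")
```

Neither utility knows about the other: each simply reads its standard input and writes its standard output, and the pipe created by the kernel connects the two.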

Users interact with the Unix environment through the shell, a CLI for entering commands that are passed to the kernel for execution. A command is used to invoke one of the available utilities. Each utility performs a specific operation, such as creating files, deleting directories, retrieving system information, or configuring the user environment.

Some Unix commands take one or more arguments, which provide a way to refine the behavior of the utility. For example, a user could enter the rm OldFIle.txt command. The command calls the rm utility, which deletes files in a directory. The command also includes the OldFIle.txt argument, which is the file to delete. When the user enters this command in the shell, the kernel runs the rm program and deletes the specified file.
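The split between a utility and its arguments can be sketched in a few lines of Python. The helpers parse_command and remove_files are hypothetical names used only for illustration: the first imitates how a shell breaks a simple command line into words, and the second is a simplified stand-in for what rm ultimately asks the kernel to do. Real shells and the real rm handle many more cases (options, wildcards, directories, error messages).

```python
import os
import shlex
import tempfile


def parse_command(line):
    """Split a command line into (utility, arguments) on whitespace and quotes."""
    words = shlex.split(line)
    return words[0], words[1:]


def remove_files(paths):
    """Simplified stand-in for rm: ask the kernel to unlink each named file."""
    for path in paths:
        os.unlink(path)


if __name__ == "__main__":
    utility, args = parse_command("rm OldFIle.txt")
    print(utility, args)

    # Demonstrate the removal on a throwaway file rather than a real one.
    fd, path = tempfile.mkstemp()
    os.close(fd)
    remove_files([path])
    print(os.path.exists(path))
```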

Unix treats all file types as simple byte arrays, resulting in a much simpler file model than those of other operating systems. Unix also treats devices and some types of interprocess communication as files.
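One consequence of this model is that the same few calls work on very different objects. The Python sketch below, which assumes a Unix-like system, uses a single hypothetical helper, read_all, to read a regular file, a device file, and a pipe used for interprocess communication. In each case the program only sees a file descriptor that yields bytes.

```python
import os
import tempfile


def read_all(fd, chunk_size=4096):
    """Read bytes from any open file descriptor until end of file."""
    chunks = []
    while True:
        chunk = os.read(fd, chunk_size)
        if not chunk:  # an empty result means end of file
            break
        chunks.append(chunk)
    return b"".join(chunks)


if __name__ == "__main__":
    # A regular file is just a sequence of bytes.
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"plain bytes\n")
    fd = os.open(tmp.name, os.O_RDONLY)
    print(read_all(fd))
    os.close(fd)
    os.unlink(tmp.name)

    # A device file is opened and read with the same calls.
    fd = os.open(os.devnull, os.O_RDONLY)
    print(read_all(fd))
    os.close(fd)

    # A pipe is also reached through ordinary file descriptors.
    read_end, write_end = os.pipe()
    os.write(write_end, b"via pipe")
    os.close(write_end)
    print(read_all(read_end))
    os.close(read_end)
```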

Unix concepts have been influential for a variety of reasons, including the following:

- Unix was a driving force behind the development of the Internet and the transformation of computing into a network-centric paradigm.

- Unix developers are credited with introducing modularity and reusability into the practice of software engineering and inspiring the software tools movement.

- Unix developers have also created a set of cultural norms for software development, called the Unix philosophy, which has been very influential in the IT community.

Types of Unix

Unix became the first operating system that could be improved or enhanced by anyone, in part because it was written in the C language and embraced many popular ideas. However, its initial success led to multiple variants that lacked compatibility and interoperability. To address these issues, a number of vendors and individuals came together in the 1980s to standardize the operating system, first creating the Portable Operating System Interface (POSIX) standard and then defining the Single UNIX Specification (SUS).

Since then, Unix has continued to evolve, with the addition of new variants, some proprietary and some open source. Much of the progress has been the result of companies, universities and individuals contributing with extensions and new ideas.

The Unix license depends on the specific variant. Some variants of Unix are proprietary and licensed, such as IBM Advanced Interactive eXecutive (AIX) or Oracle Solaris, and other variants are free and open source, including Linux, FreeBSD, and OpenBSD. The UNIX trademark is now owned by The Open Group, an industry standards organization that certifies and brands UNIX implementations.

To be precise, however, Linux is not Unix, but a Unix-like operating system. Linux is modeled on Unix and continues the foundations of Unix design, though it was written independently of the original Unix code. Linux distributions are the most famous and most thriving examples of Unix-like systems. BSD (Berkeley Software Distribution) is an example of a direct Unix derivative.

Unix-like operating systems

The term Unix-like is often used to describe the different variants of Unix, but there is no clear definition of what this term means. In general, it can refer to any operating system that has any relation to Unix, no matter how distant, including free and open source variations. Some software developers claim that there are three types of Unix-like systems:

- Operating systems historically linked to Bell Labs' original code base, such as the BSD systems developed by Berkeley researchers.

- Trademarked or branded Unix-like systems that meet the SUS, such as HP-UX and IBM AIX. The Open Group has determined that these systems can use the UNIX name.

- Functional Unix-like systems, such as Linux and Minix, which behave consistently with Unix specifications. For example, they must have a program that manages login and command line sessions.

The slow decline of UNIX

The decline of Unix is "more an artifact of the lack of marketing appeal than the lack of any presence," says Joshua Greenbaum, principal analyst at Enterprise Application Consulting. "Nobody sells Unix anymore, it's kind of a dead term. It's still around, it's just not built around anyone's strategy for high-end innovation. There is no future, and it's not because there's something inherently wrong with it, it's just that anything innovative will go to the cloud."

"The UNIX market is in inexorable decline," says Daniel Bowers, research director for infrastructure and operations at Gartner. "Only 1 in 85 servers deployed this year uses Solaris, HP-UX, or AIX. Most of the applications on Unix that can be easily ported to Linux or Windows have already been moved."

Most of what remains on Unix today are custom, mission-critical workloads in industries such as financial services and healthcare. Since these apps are expensive and risky to migrate or rewrite, Bowers predicts a long-tail decline in Unix that could last 20 years. "As a viable operating system, it has at least 10 years left because there's this long tail. Even 20 years from now, people will still want to run it," he says.

Gartner doesn't track the installed base, only new sales, and the trend is downward. In the first quarter of 2014, Unix sales were $1.6 billion. By the first quarter of 2018, sales were $593 million. In terms of units, Unix sales are low, but they are almost always in the form of high-end, heavily equipped servers that are much larger than the typical two-socket x86 server.

What's the future of Unix?

Unix and its variants continue to run on a wide range of systems, including workstations, servers, and supercomputers. Linux, in particular, has taken the lead in Unix-like implementations, gaining a strong presence in data centers and on cloud platforms. In addition, the operating system now runs on all of the world's top 500 supercomputers. Linux is available as both free software and commercial proprietary software.

While Linux remains strong, particularly for enterprise servers, Unix itself has seen a decline in usage, partly due to the migration from reduced instruction set computer (RISC) platforms to x86-based alternatives, which can run more workloads and provide higher performance at a lower cost.

Experts predict that many organizations will continue to use Unix for mission-critical workloads, but will decrease their dependency on the system thanks to IT modernization and consolidation strategies. However, Unix is still the preferred system for many use cases, such as data center application support, cloud security, and vertical-specific software.

Future sales of Unix servers are expected to decline, but applications in the financial, government, and telecommunications sectors should continue to drive the use of Unix. Eventually, Unix may be abandoned entirely, but only after a long, slow decline.